Person Segmentation ML Application

Description

The UC Segmentation application detects and segments persons within the camera’s field of view. For each detected person it produces a pixel-wise mask that outlines the person's shape, together with a bounding box and a confidence score. This gives both spatial and confidence information for every detection. This example supports both WQVGA (480x270) and VGA (640x480) resolutions.
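To make the relationship between a mask and its bounding box concrete, here is a minimal sketch. It assumes a per-person binary mask stored as a list of rows. The `PersonDetection` class, its fields, and the 0.5 threshold in `filter_detections` are hypothetical illustrations, not the SDK's actual output structure. The bounding box is simply the tightest rectangle enclosing all mask pixels.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


# Hypothetical container for one detection; field names are illustrative only.
@dataclass
class PersonDetection:
    mask: List[List[int]]  # H x W binary mask, 1 = person pixel
    score: float           # confidence score in [0, 1]

    def bounding_box(self) -> Optional[Tuple[int, int, int, int]]:
        """Return (x_min, y_min, x_max, y_max) enclosing all mask pixels, or None if empty."""
        rows = [y for y, row in enumerate(self.mask) if any(row)]
        cols = [x for row in self.mask for x, v in enumerate(row) if v]
        if not rows:
            return None
        return min(cols), min(rows), max(cols), max(rows)


def filter_detections(dets: List[PersonDetection], threshold: float = 0.5) -> List[PersonDetection]:
    """Keep detections whose confidence meets a hypothetical threshold."""
    return [d for d in dets if d.score >= threshold]


if __name__ == "__main__":
    det = PersonDetection(mask=[[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 0, 0]], score=0.9)
    print(det.bounding_box())  # (1, 1, 2, 2)
```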

Build Instructions

Prerequisites

Configuration and Build Steps

Select Default Configuration

make cm55_person_segmentation_defconfig

This configuration uses WQVGA resolution by default.

Optional Configuration:

💡 Tip: Run

make menuconfig

to modify the configuration via a GUI.

| Configuration | Menu Navigation | Action |
|---|---|---|
| VGA Resolution | COMPONENTS CONFIGURATION → Off Chip Components → Display Resolution | Change to VGA(640x480) |
| WQVGA in LP Sense | COMPONENTS CONFIGURATION → Drivers | Enable MODULE_LP_SENSE_ENABLED |
| Static Image | COMPONENTS CONFIGURATION → Off Chip Components | Disable MODULE_IMAGE_SENSOR_ENABLED |

Build the Application

The build process generates the .elf or .axf files required for deployment:

make build

or

make
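After changing options in the configuration GUI, you may want to confirm which modules ended up enabled. For example, the Static Image setup should leave MODULE_IMAGE_SENSOR_ENABLED disabled. The sketch below rests on an assumption: the SDK saves a standard Kconfig-style `.config` file that uses the `CONFIG_` prefix. Neither the prefix nor the file location is confirmed by this guide.

```python
import re
from typing import Dict

# Assumption: standard Kconfig ".config" syntax, i.e. "CONFIG_<NAME>=<value>"
# for set symbols and "# CONFIG_<NAME> is not set" for disabled booleans.
_SET = re.compile(r"^CONFIG_(\w+)=(.*)$")
_UNSET = re.compile(r"^# CONFIG_(\w+) is not set$")


def parse_kconfig(text: str) -> Dict[str, str]:
    """Map each symbol (without the CONFIG_ prefix) to its value; unset booleans map to 'n'."""
    values: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        m = _SET.match(line)
        if m:
            values[m.group(1)] = m.group(2)
            continue
        m = _UNSET.match(line)
        if m:
            values[m.group(1)] = "n"
    return values


def is_enabled(values: Dict[str, str], symbol: str) -> bool:
    """True only when the symbol is explicitly set to 'y'."""
    return values.get(symbol) == "y"


if __name__ == "__main__":
    sample = (
        "CONFIG_MODULE_LP_SENSE_ENABLED=y\n"
        "# CONFIG_MODULE_IMAGE_SENSOR_ENABLED is not set\n"
    )
    cfg = parse_kconfig(sample)
    print(is_enabled(cfg, "MODULE_LP_SENSE_ENABLED"))      # True
    print(is_enabled(cfg, "MODULE_IMAGE_SENSOR_ENABLED"))  # False
```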

Deployment and Execution

Setup and Flashing

Open Image Flashing Window: Navigate to the Image Flashing Window in the Astra SRSDK VS Code Extension. For detailed steps on generating and flashing images, refer to the Astra SRSDK VSCode Extension User Guide.

Connect Device: Establish a connection between your target device and the host system. Refer to the Quick Start Guide.

Generate Binary Files

FW Binary generation

Navigate to AXF/ELF TO BIN → Bin Conversion in the Astra SRSDK VSCode Extension.

Load the generated sr110_cm55_fw.elf or sr110_cm55_fw.axf file.

Click Run Image Generator to create the binary files.

Model Binary generation (to place the Model in Flash)

To generate a .bin file for a TFLite model, refer to the Vela compilation guide.

Flash the Application

For WQVGA resolution:

Flash the generated B0_flash_full_image_GD25LE128_67Mhz_secured.bin file directly to the device.

Note: The model weights are placed in SRAM.

For VGA resolution:

Flash the pre-generated model binary person_segmentation_flash(448x640).bin. Due to memory constraints, the model weights must be burned to flash.

Location: examples/vision_examples/uc_person_segmentation/models/

Flash address: 0x629000

Calculation Note: The flash address is the sum of the host_image size and the image_offset_SDK_image_B_offset parameter, which is defined in NVM_data.json. The resulting address must be aligned to a sector boundary (a multiple of 4096 bytes). Assign this address to the image_offset_Model_A_offset macro in your NVM_data.json file.

Flash the generated B0_flash_full_image_GD25LE128_67Mhz_secured.bin file.

Device Reset: Reset the target device after flashing is complete.
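The flash-address rule from the VGA calculation note can be sketched as follows. The 4096-byte sector size comes from this guide. Rounding *up* to the next sector boundary is an assumption: it keeps the model from overlapping the end of the preceding image. The input values in the example are hypothetical and are not the real sizes behind 0x629000.

```python
SECTOR_SIZE = 4096  # flash sector size stated in this guide


def align_up(value: int, alignment: int = SECTOR_SIZE) -> int:
    """Round value up to the next multiple of alignment (alignment must be a power of two)."""
    return (value + alignment - 1) & ~(alignment - 1)


def model_flash_address(host_image_size: int, sdk_image_b_offset: int) -> int:
    """host_image size + image_offset_SDK_image_B_offset, rounded up to a sector boundary.

    Rounding up (rather than rejecting unaligned sums) is an assumption.
    """
    return align_up(host_image_size + sdk_image_b_offset)


def is_sector_aligned(address: int) -> bool:
    """True if the address is a multiple of the sector size."""
    return address % SECTOR_SIZE == 0


if __name__ == "__main__":
    print(is_sector_aligned(0x629000))                  # True (documented VGA address)
    print(hex(model_flash_address(0x12345, 0x20000)))   # 0x33000 (hypothetical inputs)
```

The result is the value you would assign to image_offset_Model_A_offset in NVM_data.json.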

Note:

The placement of the model (in SRAM or flash) is determined by its memory requirements. A model whose weights, together with the tensor arena needed for inference, exceed the available SRAM is stored in flash.
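The placement rule in the note above amounts to a single comparison. This sketch assumes the decision is "weights plus tensor arena versus available SRAM". The `ModelFootprint` type and all byte counts are hypothetical, since the guide does not give the actual SRAM budget or model sizes.

```python
from dataclasses import dataclass


# Hypothetical description of a model's memory needs; values are illustrative.
@dataclass
class ModelFootprint:
    weights_bytes: int       # size of the model weights
    tensor_arena_bytes: int  # working memory needed during inference


def model_location(model: ModelFootprint, sram_available_bytes: int) -> str:
    """Return 'SRAM' if weights and tensor arena fit in SRAM together, otherwise 'FLASH'."""
    if model.weights_bytes + model.tensor_arena_bytes <= sram_available_bytes:
        return "SRAM"
    return "FLASH"


if __name__ == "__main__":
    small = ModelFootprint(weights_bytes=1_000_000, tensor_arena_bytes=500_000)
    large = ModelFootprint(weights_bytes=3_000_000, tensor_arena_bytes=500_000)
    print(model_location(small, sram_available_bytes=2_000_000))  # SRAM
    print(model_location(large, sram_available_bytes=2_000_000))  # FLASH
```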

Running the Application

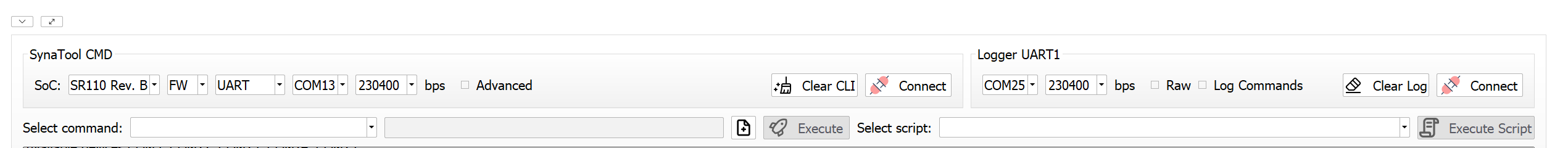

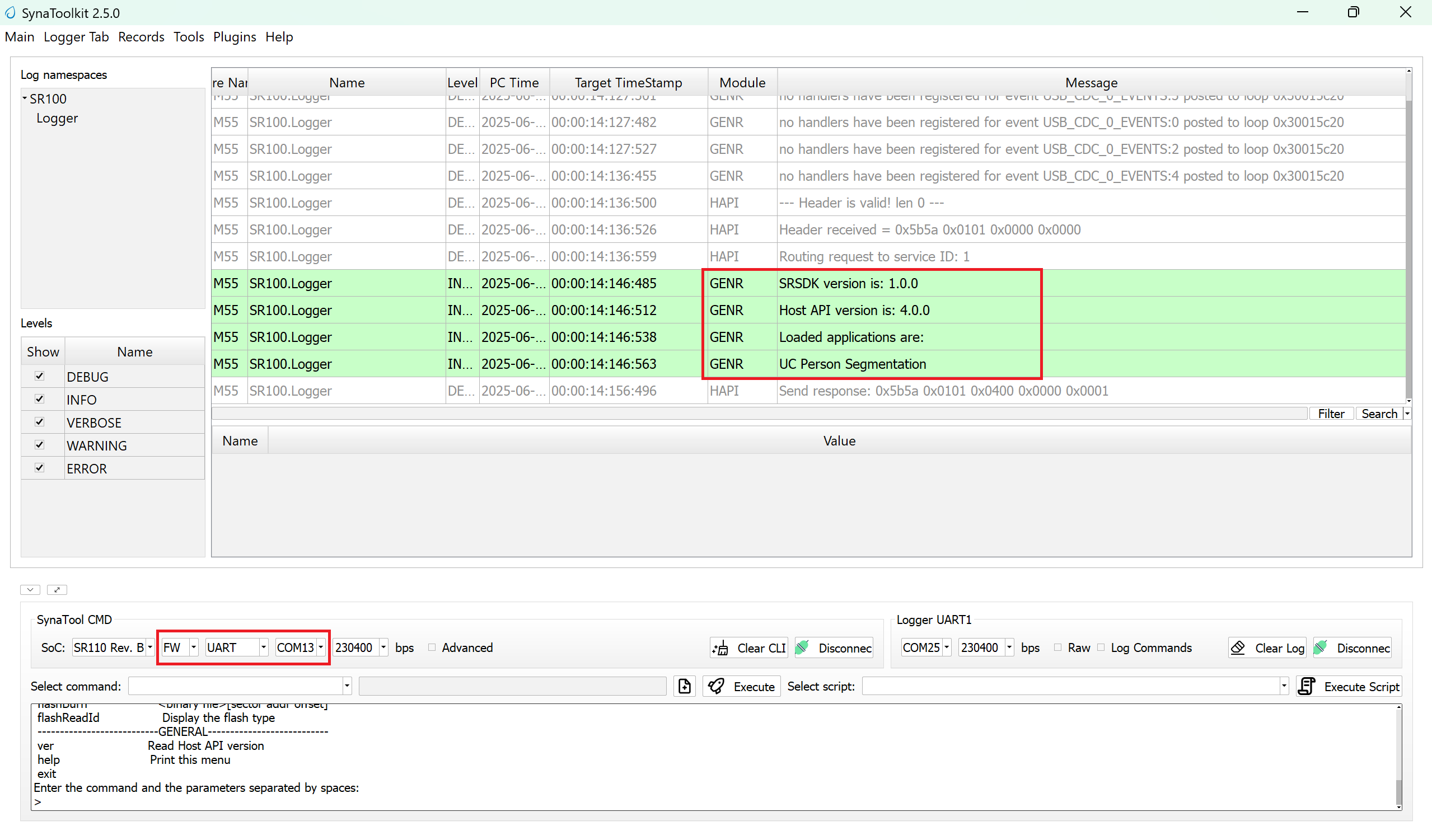

Connect to Serial Interface

Connect to the newly enumerated COM port.

For logging output, connect to the DAP logger port.

The example logs will then appear in the logger window.

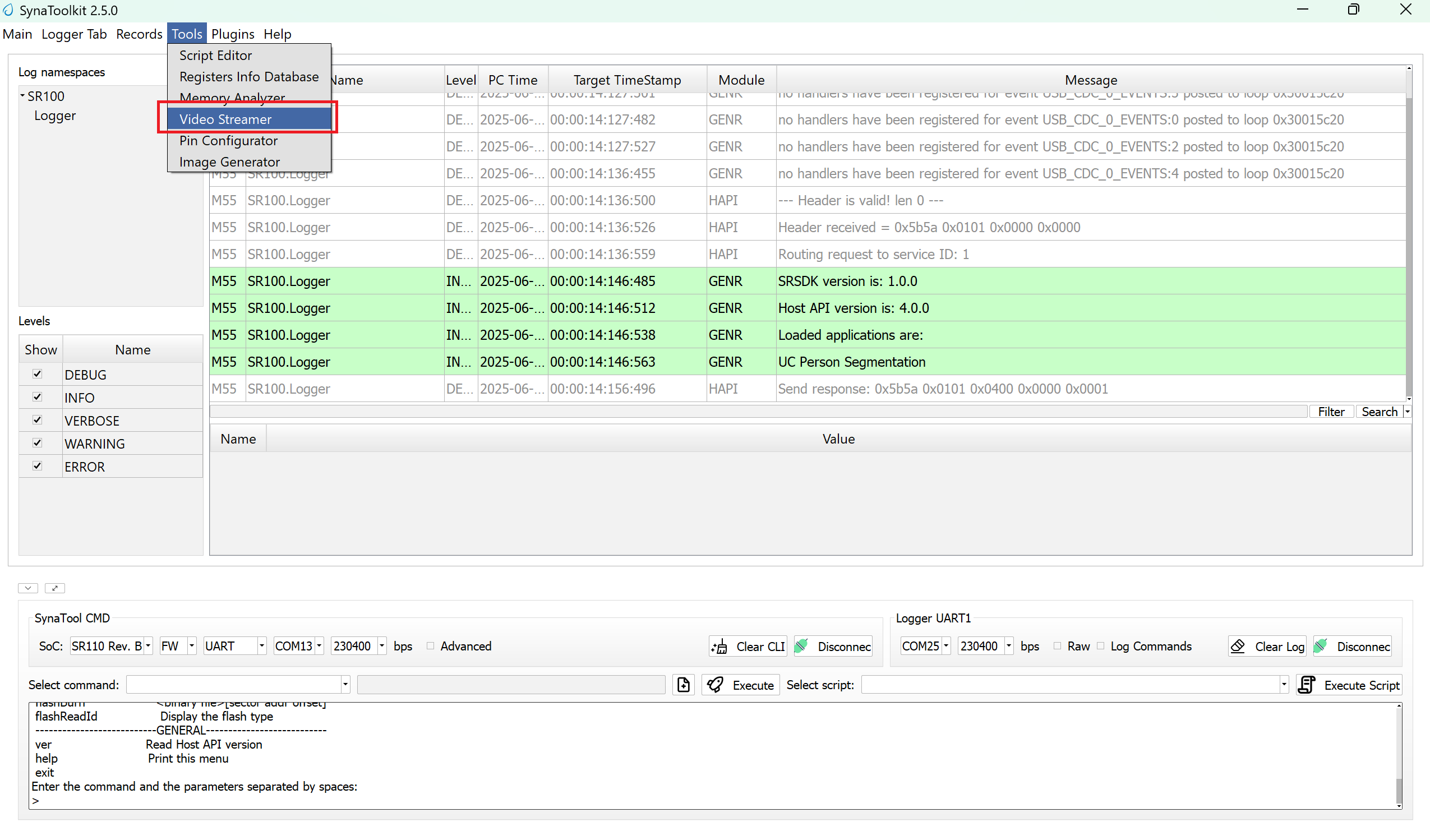

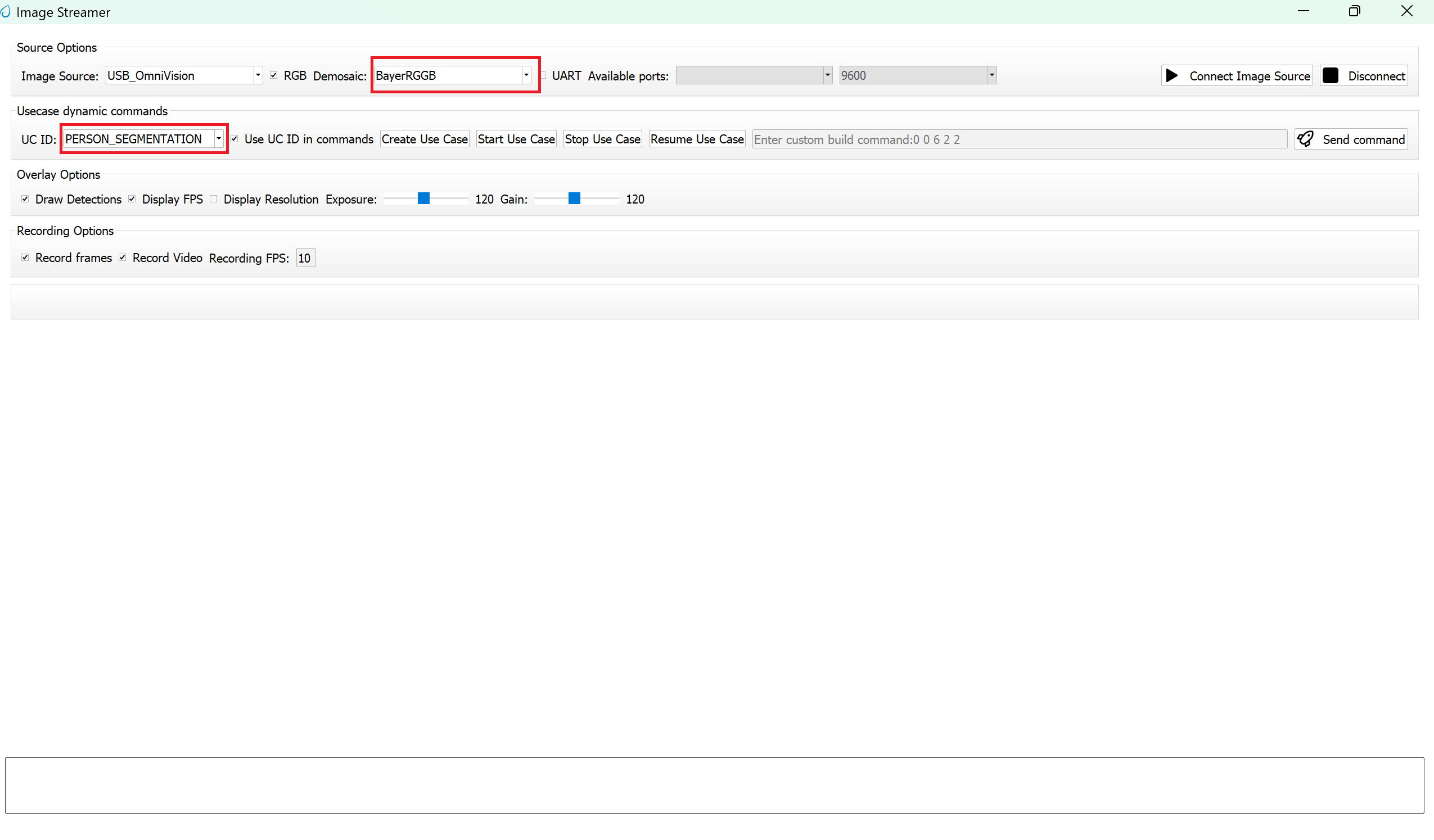

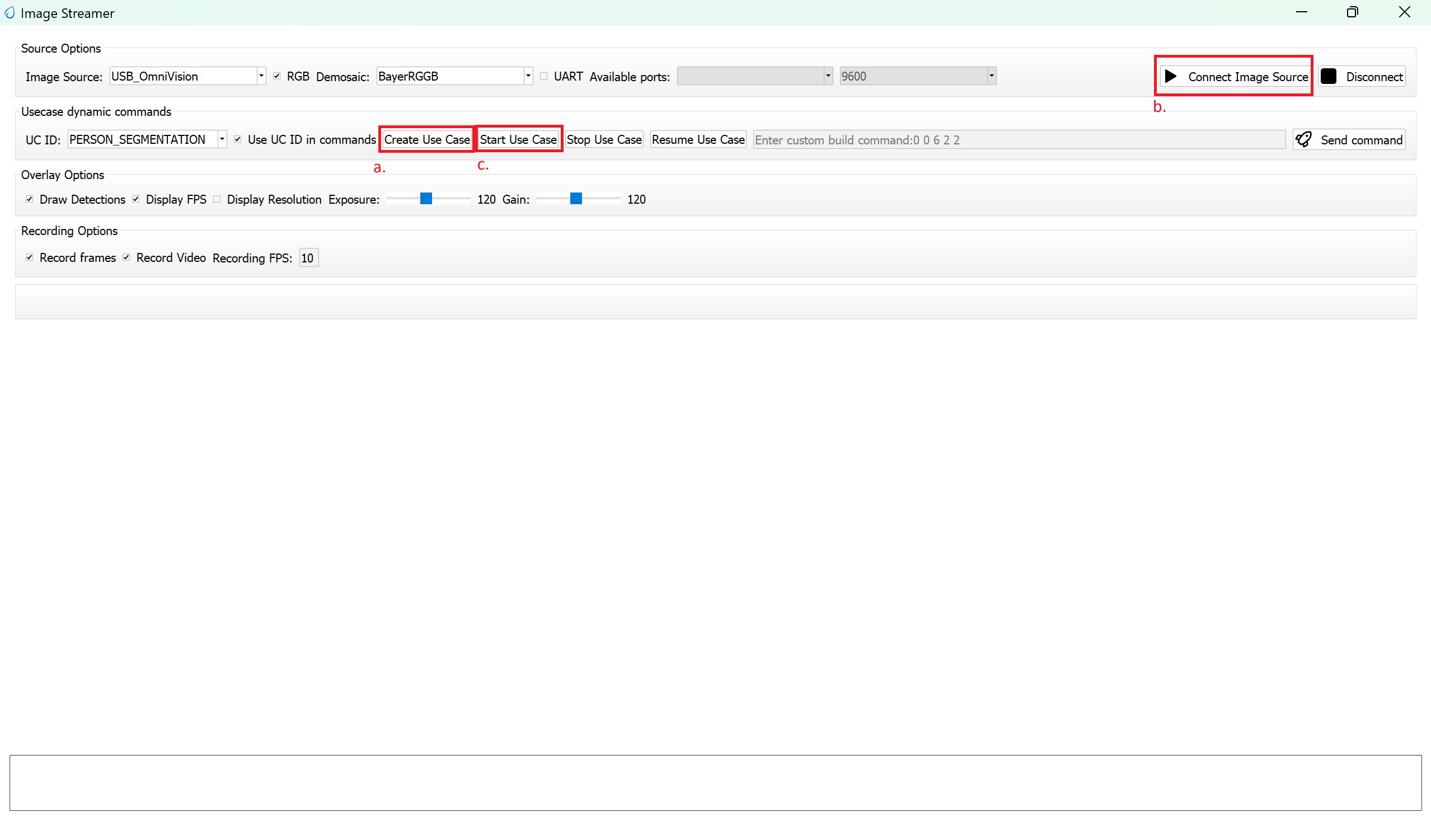

Next, navigate to Tools → Video Streamer in SynaToolkit to run the application.

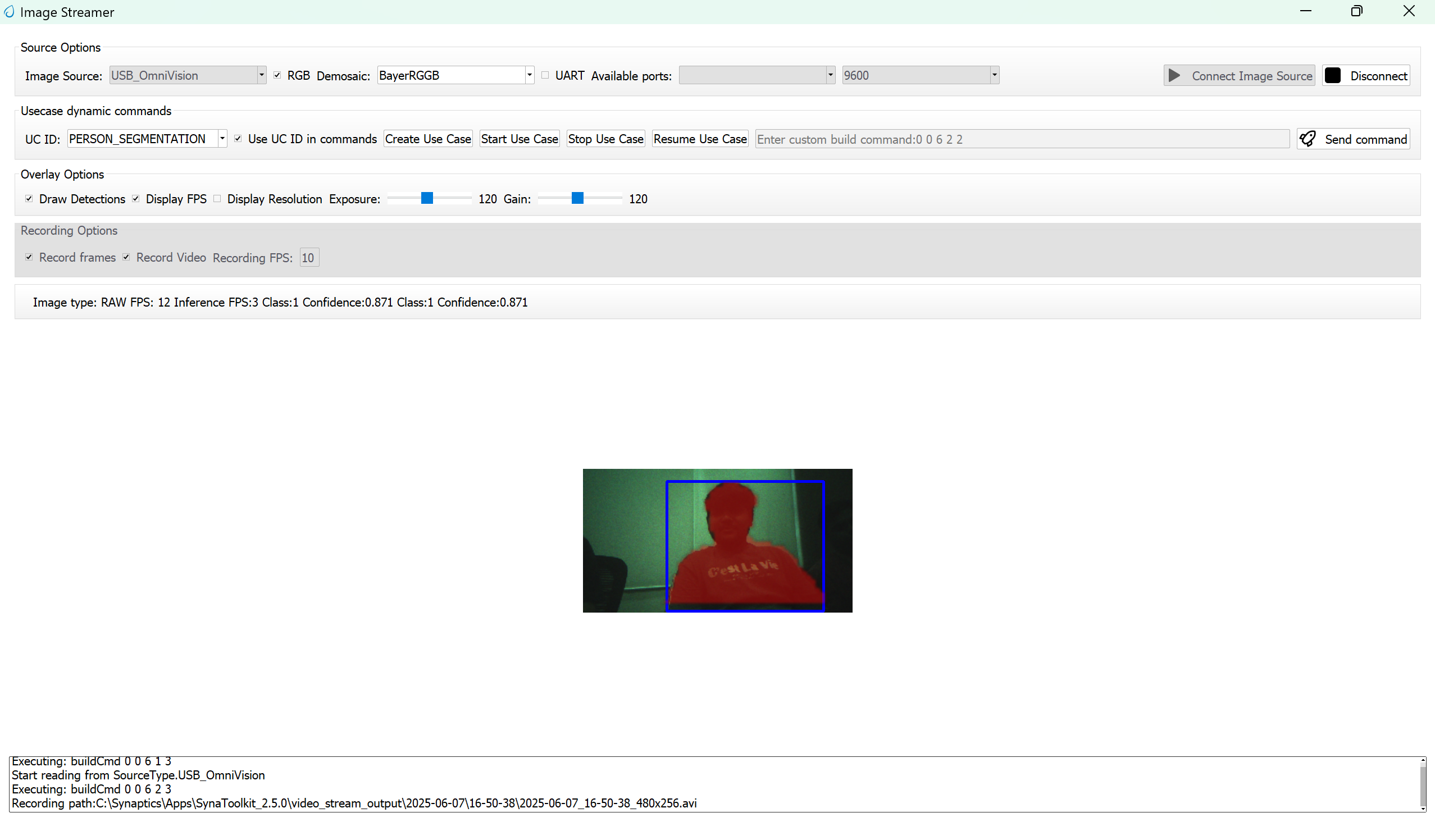

Video Streamer

Configure the following settings:

UC ID: PERSON_SEGMENTATION

RGB Demosaic: BayerRGGB

Click Create Usecase

Connect the image source

Click Start Usecase to begin real-time segmentation

After you start the use case, person segmentation begins streaming video, as shown below.
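The RGB Demosaic setting BayerRGGB describes the sensor's colour-filter layout: each 2x2 tile holds R and G on the top row and G and B on the bottom row. The sketch below shows the simplest way to turn such a raw frame into RGB. It collapses each tile into one pixel and averages the two greens. This 2x2 binning is a stand-in technique chosen for clarity, not necessarily the algorithm the Video Streamer uses.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]


def demosaic_rggb_binned(raw: List[List[int]]) -> List[List[Pixel]]:
    """Convert an RGGB Bayer frame to RGB by collapsing each 2x2 tile into one pixel.

    Tile layout:
        R  G
        G  B
    The output is half the width and half the height of the input.
    """
    h, w = len(raw), len(raw[0])
    if h % 2 or w % 2:
        raise ValueError("frame dimensions must be even")
    out: List[List[Pixel]] = []
    for y in range(0, h, 2):
        row: List[Pixel] = []
        for x in range(0, w, 2):
            r = raw[y][x]
            g = (raw[y][x + 1] + raw[y + 1][x]) // 2  # average the two green samples
            b = raw[y + 1][x + 1]
            row.append((r, g, b))
        out.append(row)
    return out


if __name__ == "__main__":
    print(demosaic_rggb_binned([[200, 100], [110, 50]]))  # [[(200, 105, 50)]]
```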